In Agent Engineering, what does the perfect agent actually look like and how far away are we from that with today’s Planning Agents?

Mental Model: An all-knowing AGI Agent is really a perfect, just-in-time workflow generator & executor.

The all-knowing AGI Agent execution pattern:

- Take in a Task

- Decompose Task into an exact series of steps needed to complete it given the tools available

- Dynamically generate new tools if existing tools do not provide a path to completion

- Execute steps
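To make the mental model concrete, here is a toy sketch of that execution pattern. Everything in it is hypothetical: `decompose` stands in for a perfect planner and `generate_tool` for on-demand tool synthesis. In a real agent both would be model calls; here they are hard-coded stubs.

```python
from typing import Callable, Dict, List, Tuple

Tool = Callable[[str], str]
Step = Tuple[str, str]  # (tool name, argument)


def decompose(task: str) -> List[Step]:
    # Hypothetical stand-in for a perfect planner: returns the exact steps for the task.
    return [("fetch", task), ("summarize", task), ("translate", task)]


def generate_tool(name: str) -> Tool:
    # Hypothetical stand-in for dynamic tool generation when no existing tool fits.
    return lambda arg: f"{name}({arg})"


def run_task(task: str, tools: Dict[str, Tool]) -> List[str]:
    steps = decompose(task)                   # take in the Task and decompose it
    for name, _ in steps:
        if name not in tools:                 # generate any tool the plan needs but lacks
            tools[name] = generate_tool(name)
    return [tools[name](arg) for name, arg in steps]  # execute the steps in order


tools = {"fetch": lambda a: f"fetched {a}", "summarize": lambda a: f"summary of {a}"}
print(run_task("report", tools))
# ['fetched report', 'summary of report', 'translate(report)']
```

The interesting part is not the loop but the two stubs: a perfect agent is one where `decompose` always returns exactly the right steps and `generate_tool` always fills the gap correctly.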

But today’s agents are imperfect. Modern Planning Agents are just-in-time, adaptive workflow generators. Let’s define each of those terms.

Glossary:

Workflows: Systems where LLMs and tools are orchestrated through predefined code paths (definition from Anthropic).

Planning Agents: Agents that decompose a Task into a series of concrete steps (an in-memory workflow definition) needed to achieve the goal. A common UX here is the ToDo List.

Just-in-time: Plans are generated at runtime, not predetermined like in traditional workflows.

Adaptive: Agents can refine their plans during execution. Plan refinement can be based on user feedback, additional context, examining the outputs of tests, etc.
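A minimal sketch of a ToDo list as an in-memory workflow definition, assuming a plan is simply an ordered list of steps. The `Todo` and `Plan` names are illustrative, not any specific product's API. Replanning here keeps completed steps and replaces everything still pending.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Todo:
    text: str
    done: bool = False


@dataclass
class Plan:
    """A ToDo list as an in-memory workflow definition (illustrative sketch)."""
    todos: List[Todo] = field(default_factory=list)

    def next_step(self) -> Optional[Todo]:
        # The first unchecked ToDo is the next step to execute; None means finished.
        return next((t for t in self.todos if not t.done), None)

    def check_off(self) -> None:
        step = self.next_step()
        if step is not None:
            step.done = True

    def replan(self, new_steps: List[str]) -> None:
        # Adaptive: keep completed work, replace all pending steps with the new plan.
        self.todos = [t for t in self.todos if t.done] + [Todo(s) for s in new_steps]


plan = Plan([Todo("read spec"), Todo("write code"), Todo("run tests")])
plan.check_off()  # "read spec" is now done
plan.replan(["read library docs", "write code", "run tests"])  # e.g. after user feedback
print([t.text for t in plan.todos if not t.done])
# ['read library docs', 'write code', 'run tests']
```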

When an agent starts up, it’s completely unconstrained in what it should generate. Today, planning is the way we reliably “discretize” this massive action space into a set of pre-defined steps to execute. Let’s talk about how to do that well.

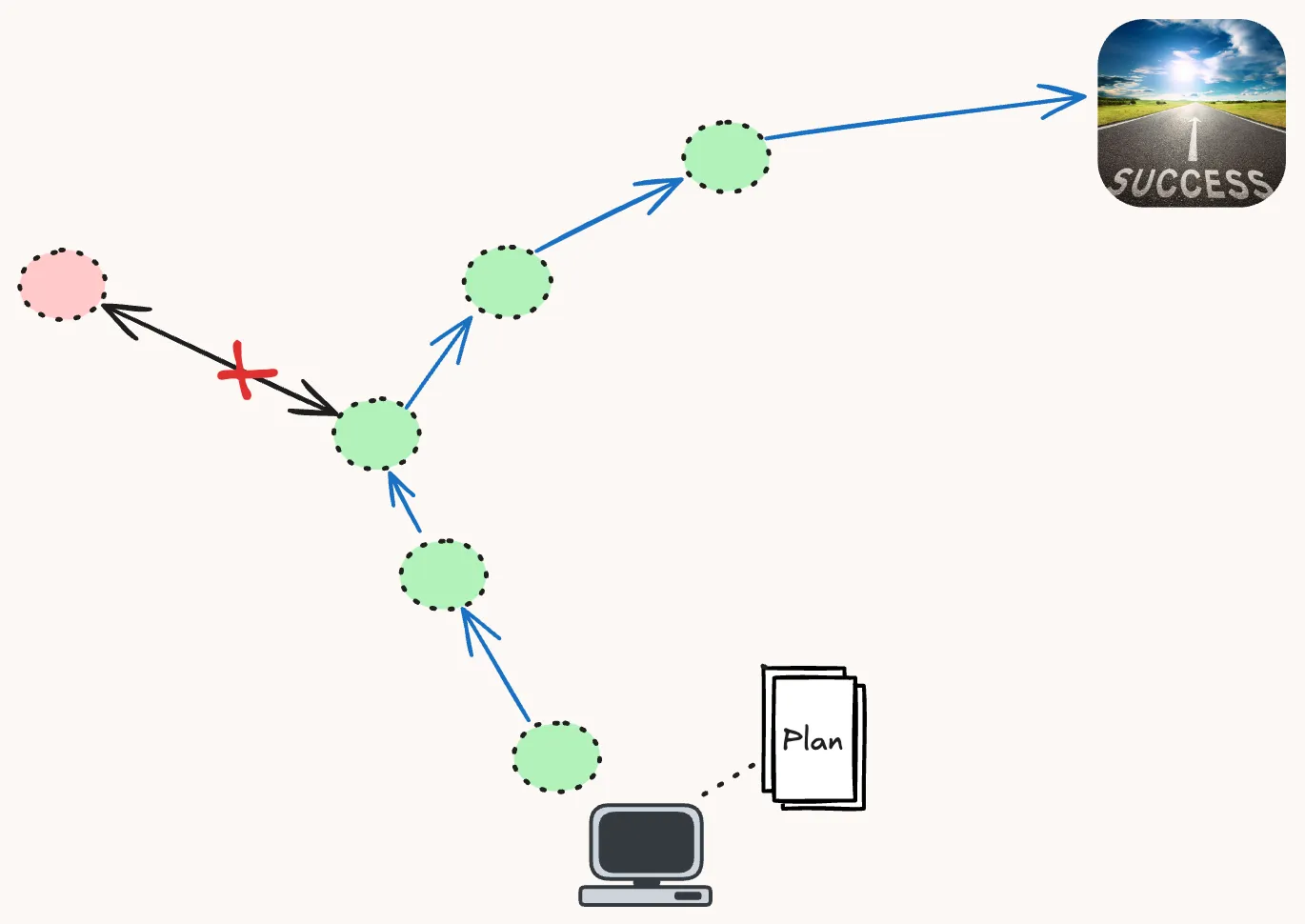

Plan generation gives the agent a discrete sequence of steps to follow. When errors are discovered (by a user, from test results, etc.), the agent can replan to change its trajectory.
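The plan, execute, replan loop can be sketched as follows. This is a toy under stated assumptions: `make_plan` and `execute` are hypothetical callables (in practice an LLM call and tool calls), errors are modeled as `RuntimeError`, and the error message is the only signal fed back to the planner.

```python
from typing import Callable, List, Optional


def run_with_replanning(
    task: str,
    make_plan: Callable[[str, List[str], Optional[str]], List[str]],
    execute: Callable[[str], None],
    max_replans: int = 3,
) -> List[str]:
    """Plan, execute the steps in order, and replan from the current state on error."""
    completed: List[str] = []
    feedback: Optional[str] = None
    for _ in range(max_replans + 1):
        # The planner sees what is already done plus the latest error and returns the remaining steps.
        plan = make_plan(task, completed, feedback)
        try:
            for step in plan:
                execute(step)
                completed.append(step)
            return completed
        except RuntimeError as err:
            feedback = str(err)  # the failure becomes the signal for the next plan
    raise RuntimeError(f"gave up after {max_replans} replans: {feedback}")


# Toy demo: tests fail until the agent reads the (hypothetical) library docs.
history: List[str] = []


def make_plan(task: str, completed: List[str], feedback: Optional[str]) -> List[str]:
    if feedback is None:
        return ["edit code", "run tests"]
    return ["read docs", "fix code", "run tests"]


def execute(step: str) -> None:
    if step == "run tests" and "read docs" not in history:
        raise RuntimeError("tests failed: outdated library API")
    history.append(step)


print(run_with_replanning("fix bug", make_plan, execute))
# ['edit code', 'read docs', 'fix code', 'run tests']
```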


Agent Engineering is how to Generate the “Right Steps”

When you give a planning agent a Task, it samples a trajectory from the space of all possible workflows. In general, the correct trajectory for a Task can't be known beforehand. Starting from your Task and Agent Harness (prompts, tools, context), most of these trajectories are dead ends, but some will succeed. So the obvious question is: how do we sample the correct trajectories?
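A back-of-the-envelope model of why most trajectories are dead ends, under a deliberately simplified assumption: each of n steps succeeds independently with probability p. A single trajectory then succeeds with probability p^n, and with k independent resamples at least one succeeds with probability 1 - (1 - p^n)^k. The numbers are illustrative, not measurements.

```python
def trajectory_success(p: float, n: int) -> float:
    """P(a sampled trajectory succeeds), if each of n steps succeeds independently with probability p."""
    return p ** n


def any_success(p: float, n: int, k: int) -> float:
    """P(at least one of k independently sampled trajectories succeeds)."""
    return 1 - (1 - trajectory_success(p, n)) ** k


# Illustrative numbers only: a 20-step task at three per-step success rates.
for p in (0.90, 0.95, 0.99):
    one = trajectory_success(p, 20)
    three = any_success(p, 20, 3)
    print(f"p={p:.2f}  one attempt: {one:.2f}  three attempts: {three:.2f}")
```

Under this model, raising per-step success from 0.90 to 0.99 takes a 20-step trajectory from roughly 12% to roughly 82%, because the improvement compounds over every step.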

To answer this, let’s walk through a beloved example (agentic coding). Here’s a possible trajectory; take note of the key words and break points:

- Agent thinks through the Task and generates a plan as a ToDo list.

- Agent is running, checking off ToDos. The user interrupts, noticing that the agent’s current approach contains an error.

- Agent reflects on feedback and generates a new plan and updates the ToDos. Execution resumes.

- The agent generates and runs test cases for an intermediate step, some fail. The agent examines the errors, realizes it needs to read documentation for an outdated library, reads it from a local file, and implements a fix. All tests now pass.

- The agent checks off all ToDos. Tests pass. User accepts. Task is complete.

Now, I hate to say it, but the real world is significantly more painful than that. Still, you get the idea, and many Agent Engineering principles fall neatly into this mental model. For example:

Context & Harness Engineering: Context and harness design are the knobs users control most directly for increasing the probability of success. Poor design can leave few or no trajectories that lead to success. Design includes careful engineering of Prompts, Tools/MCPs/Skills, Context/Docs, Subagent definitions, and more. Good tool design means having the right tools for the desired functionality and building Compound Tools as semantic actions that reduce the error surface area across many tool calls. Context engineering includes writing detailed prompts to guide behavior and keeping important documentation readily available (as in the example above, where the agent read library docs from a local file). I cover Harness Engineering in more depth in a previous blog post.
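A sketch of what a Compound Tool might look like, assuming a hypothetical in-memory user store. Instead of exposing three primitive tools that the agent must call in the right order with the right arguments, the harness exposes one semantic action that performs them deterministically. All function names here are illustrative.

```python
from typing import Dict, List

USERS: Dict[str, dict] = {}
OUTBOX: List[str] = []


# Primitive tools: the agent would have to chain these correctly on its own.
def create_user(email: str, name: str) -> str:
    user_id = f"u{len(USERS) + 1}"
    USERS[user_id] = {"email": email, "name": name, "plan": "free"}
    return user_id


def set_plan(user_id: str, plan: str) -> None:
    USERS[user_id]["plan"] = plan


def send_email(to: str, body: str) -> None:
    OUTBOX.append(f"to={to}: {body}")


# Compound tool: one semantic action, one tool call, one place to validate inputs.
def onboard_customer(email: str, name: str, plan: str = "free") -> str:
    """Create the user, assign a plan, and send a welcome email."""
    if "@" not in email:
        raise ValueError(f"invalid email: {email!r}")
    user_id = create_user(email, name)
    set_plan(user_id, plan)
    send_email(email, f"Welcome {name}, you are on the {plan} plan.")
    return user_id


uid = onboard_customer("ada@example.com", "Ada", plan="pro")
print(uid, USERS[uid]["plan"], OUTBOX[-1])
# u1 pro to=ada@example.com: Welcome Ada, you are on the pro plan.
```

The agent now makes one call instead of three, so there are fewer places to get the ordering or an argument wrong.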

Thinking and Replanning: Thinking is a pruning mechanism that searches for likely high-probability trajectories based on world experience from training. This raises the question of why agents can be so bad at Out of Distribution tasks (e.g., Karpathy’s experience building NanoChat), but that’s for another time. Replanning is the mechanism for resampling a trajectory based on the current state of the code (or of any task). Replanning gets much better when the model has better context and signal about which direction to go, such as user feedback or logs from tests.
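Since replanning quality depends on the signal handed to the model, a harness might assemble the replan request from the current plan state plus every available signal. This is a hypothetical sketch: the `Signal` type and the prompt layout are assumptions, and the resulting string would be sent to whatever model the agent uses.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Signal:
    source: str   # e.g. "user", "tests", "logs"
    content: str


def build_replan_prompt(task: str, done: List[str], pending: List[str],
                        signals: List[Signal], max_chars: int = 2000) -> str:
    """Assemble the context for a replanning call (illustrative layout)."""
    lines = [f"Task: {task}", "Completed steps:"]
    lines += [f"  [x] {s}" for s in done] or ["  (none)"]
    lines.append("Current pending steps:")
    lines += [f"  [ ] {s}" for s in pending] or ["  (none)"]
    lines.append("New signals since the last plan:")
    for sig in signals:
        # Truncate long outputs (e.g. test logs) so they don't crowd out the rest of the context.
        body = sig.content if len(sig.content) <= max_chars else sig.content[:max_chars] + " ...[truncated]"
        lines.append(f"  ({sig.source}) {body}")
    lines.append("Revise the pending steps so the task can be completed.")
    return "\n".join(lines)


prompt = build_replan_prompt(
    "fix failing import",
    done=["edit module"],
    pending=["run tests"],
    signals=[Signal("tests", "ImportError: cannot import name 'foo'"),
             Signal("user", "use v2 of the library")],
)
print(prompt)
```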

Reliability Engineering: This is simply the question “can we get the agent to generate and correctly execute this series of steps consistently?” When “agents are unreliable,” it’s because they cannot consistently generate and execute the right in-memory workflow across the relevant tasks.
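Reliability in this sense can be measured directly: run the agent several times per task and check both how often it succeeds and whether it succeeds every time. A sketch, assuming a hypothetical `run_agent(task) -> bool` that reports whether a single run succeeded. The stand-in below is a seeded random stub, not a real agent.

```python
import random
from typing import Callable, Dict, List


def reliability_report(tasks: List[str], run_agent: Callable[[str], bool],
                       trials: int = 5) -> Dict[str, dict]:
    """Per task: mean success rate and whether every trial succeeded (consistency)."""
    report = {}
    for task in tasks:
        results = [run_agent(task) for _ in range(trials)]
        report[task] = {
            "success_rate": sum(results) / trials,
            "always_succeeds": all(results),
        }
    return report


# Hypothetical stand-in agent: succeeds with a fixed per-task probability.
rng = random.Random(0)
difficulty = {"rename variable": 0.99, "migrate library": 0.6}


def fake_agent(task: str) -> bool:
    return rng.random() < difficulty[task]


for task, stats in reliability_report(list(difficulty), fake_agent, trials=10).items():
    print(task, stats)
```

A task with a decent `success_rate` but `always_succeeds == False` is exactly the “unreliable agent” case: the right workflow is reachable, but the agent doesn’t generate it every time.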

So now we have a mental model of how agents sample and resample workflows dynamically, in memory, during their runtime execution. Let’s end by discussing where agents fit into traditional, deterministic workflows and into the new category of Agentic Workflow Builders.

Agents are Universal “Bridge Nodes” in Workflow Builders

Modern workflow builders (e.g., n8n) sound great. You put in the work up front to map your Task onto a set of pre-built, deterministic nodes. But once things get complex, you’re living in if-else hell, enumerating every possible path your workflow must handle. We need an easy way to collapse all of those if-else nodes into a single flexible handler.

Agents are the universal Bridge Node.

Bridge Nodes are nodes that sit between two potentially incompatible workflow steps during execution. Agents are very good at generating small, scoped functions, so they’re ideal adapters between nodes for small tasks like reformatting. Common patterns include judging inputs and validating or reformatting user uploads. Here are some examples of Bridge Node behavior:

- User Format Compatibility: A user submits a CSV with different column names than expected: [person, email, number, address] instead of [name, email, phone, address]. The bridge node reads the CSV and outputs the correct format. In this case it’s impossible to enumerate all possible inputs, and probably just as impossible to get users to follow a schema.

- Judging/Refining Inputs: Part of a workflow involves image generation, and the next node only works if a person is in the frame. An agent bridge node judges the generated image and gates passage to the next node; if no person is present, it sends back a revised prompt and retries until a person is in the picture.

- Schema Evolution: An external API keeps changing its response structure, adding extra and differently named keys and sometimes returning plain strings. The validator expects a fixed format ({name: ..., email: ...}) for the next API call. A bridge node extracts and formats the input for the next API call.
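A minimal sketch of the first example, assuming the bridge node tries a cheap deterministic mapping first and only falls back to an agent when that fails. `call_agent` is a hypothetical stub standing in for an LLM call, and the synonym table is illustrative.

```python
import csv
import io
from typing import Callable, Dict, List, Optional

EXPECTED = ["name", "email", "phone", "address"]
SYNONYMS = {"person": "name", "full_name": "name", "mail": "email",
            "number": "phone", "phone_number": "phone", "addr": "address"}


def map_headers(headers: List[str]) -> Optional[Dict[str, str]]:
    """Deterministic attempt: map each incoming header to an expected column, or give up."""
    mapping = {}
    for h in headers:
        key = h.strip().lower()
        target = key if key in EXPECTED else SYNONYMS.get(key)
        if target is None:
            return None
        mapping[h] = target
    return mapping if set(mapping.values()) == set(EXPECTED) else None


def bridge_csv(text: str, call_agent: Callable[[List[str]], Dict[str, str]]) -> List[dict]:
    """Bridge node: normalize an uploaded CSV to the columns the next node expects."""
    reader = csv.DictReader(io.StringIO(text))
    headers = list(reader.fieldnames or [])
    # Fall back to the (hypothetical) agent only for headers the table can't handle.
    mapping = map_headers(headers) or call_agent(headers)
    return [{mapping[k]: v for k, v in row.items()} for row in reader]


upload = "person,email,number,address\nAda,ada@example.com,555-0100,1 Main St\n"
rows = bridge_csv(upload, call_agent=lambda headers: {})  # agent not needed for this upload
print(rows)
# [{'name': 'Ada', 'email': 'ada@example.com', 'phone': '555-0100', 'address': '1 Main St'}]
```

Trying the deterministic path first keeps the common cases cheap and predictable; the agent only handles the long tail that can’t be enumerated.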

Dynamically Generating Bridge Nodes During Workflow Building

For products like OpenAI’s AgentKit, Agentic Bridge Nodes will be the default way to fill gaps between pre-built nodes. A likely path is that the default interaction mechanism for AgentKit (and similar products) will be an agent itself:

- Users chat with a Workflow Builder Agent and give it a Task.

- The agent creates a plan for the workflow nodes and maps each step to a set of existing, deterministic nodes.

- The agent has access to a GenerateNode() tool to be used when no existing nodes can complete the Task. The agent can choose to write deterministic code to bridge the nodes, or it can insert an AgentNode in the loop to handle the bridging; which one depends on how easy it is to enumerate the paths between the nodes.

- The user reviews, tests, and refines the workflow with the agent.
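The builder flow above might look roughly like this. Everything here is hypothetical: the node catalog, the `generate_node` helper standing in for `GenerateNode()`, and the `enumerable` flag that decides between deterministic glue code and an agent node. None of it reflects AgentKit’s actual API.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Node:
    name: str
    kind: str  # "prebuilt", "code", or "agent"


CATALOG: Dict[str, Node] = {
    "read_csv": Node("read_csv", "prebuilt"),
    "send_email": Node("send_email", "prebuilt"),
}


def generate_node(step: str, enumerable: bool) -> Node:
    # Stand-in for GenerateNode(): write deterministic code when the paths between nodes
    # can be enumerated, otherwise insert an agent in the loop as a bridge node.
    return Node(f"bridge:{step}", "code" if enumerable else "agent")


def build_workflow(plan: List[Tuple[str, bool]]) -> List[Node]:
    """Map each planned step to an existing node, generating a node when none fits."""
    return [CATALOG.get(step) or generate_node(step, enumerable) for step, enumerable in plan]


# A plan the builder agent might produce for "email everyone in this CSV".
# Each entry is (step, whether its input paths are easy to enumerate).
plan = [("read_csv", True), ("normalize_columns", False), ("send_email", True)]
for node in build_workflow(plan):
    print(node.kind, node.name)
# prebuilt read_csv
# agent bridge:normalize_columns
# prebuilt send_email
```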

That’s a wrap. We went over:

- A useful mental model for understanding how the dream of an AGI Agent is really a perfect workflow generator

- How modern planning agents are imperfect but steerable in-memory workflow generators

- The importance of an agentic bridge node primitive as a way to make existing agentic workflow building products better

Happy building!